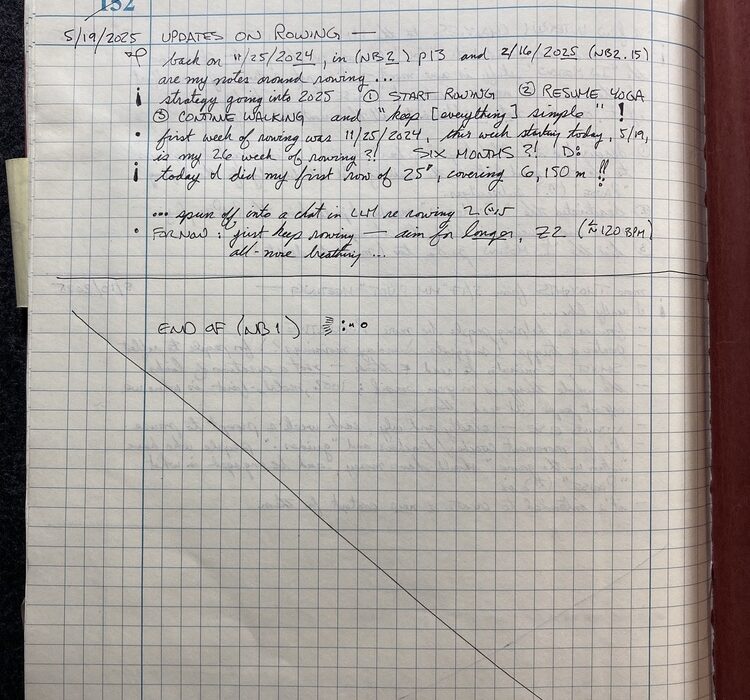

I’ve been doing 16:8 intermittent fasting for years and recently started 48-hour fasts, dropping about three pounds each fast, gaining one or two back, and trending steadily downward. I wanted to understand what the research actually says about what I’m doing to myself, so I worked with Claude (Anthropic’s AI) to produce this series. I set the structure, chose the topics, pushed back on claims that felt hand-wavy, and guided the editorial tone. Claude did the writing and research synthesis. In short, my curiosity drove Claude’s research and prose.

Mental Clarity, BDNF, and Ketone Fuel

Research brief — what happens in the brain during extended fasting. The subjective experience of mental clarity is real and widely reported; the science behind it is more complicated than the popular narrative suggests.

The Clarity People Report

Many people describe a distinct shift during extended fasting — typically somewhere after the 24–36 hour mark — from brain fog to unusual mental clarity. This is one of the most consistently reported subjective experiences of fasting, across cultures and contexts. It’s real. The question is why.

Three candidate explanations, not mutually exclusive:

- Ketone metabolism — the brain runs efficiently on beta-hydroxybutyrate (BHB)

- BDNF upregulation — fasting may increase brain-derived neurotrophic factor

- Stable fuel supply — no more blood sugar fluctuations from meals

The first and third have strong physiological grounding. The second is where the science gets shaky.

Ketones as Brain Fuel

By 48 hours of fasting, the liver is producing ketone bodies, primarily BHB, as a major fuel source alongside fatty acids. The brain, which normally relies heavily on glucose, can use BHB efficiently. Some researchers argue the brain actually runs more efficiently on ketones than on glucose in certain contexts, producing more ATP per unit of oxygen consumed.

This is part of the metabolic switch described in Part 1 (1). The shift from glucose to ketone-based energy isn’t just happening in muscles and liver; the brain is making the same transition, and the subjective experience of clarity likely tracks with the completion of this fuel switch. The “keto flu” brain fog (Part 2) happens during the messy transition; the clarity arrives once the brain has fully adapted to the new fuel source.

Evidence strength: Strong. The biochemistry of ketone metabolism in the brain is well-established.

BDNF — The Overhyped Claim

Brain-derived neurotrophic factor (BDNF) supports neuroplasticity, neuronal resilience, and the growth of new synaptic connections. Animal studies consistently show that fasting upregulates BDNF, which is why it’s frequently cited as a fasting benefit.

The human picture is much murkier.

A 2024 systematic review published in Medicina examined 16 human studies (from 2000–2023) on intermittent fasting (IF), calorie restriction, and BDNF levels (2). The results were strikingly split:

- 5 studies showed significant BDNF increase after fasting interventions

- 5 studies showed significant BDNF decrease

- 6 studies showed no significant change

That’s about as close to “we don’t know” as a systematic review can get. The review concluded that IF has “varying effects on BDNF levels” in humans.
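
To make the split concrete, here is a minimal Python sketch that turns the three counts reported above into shares of the 16 studies. The variable names are illustrative, and the counts are taken directly from the review as summarized here; nothing else about the studies (sample size, protocol, quality) is modeled.

```python
# Counts of human studies by BDNF outcome, as reported in the 2024 review.
# The dictionary keys are illustrative labels, not terms from the review.
outcomes = {
    "significant increase": 5,
    "significant decrease": 5,
    "no significant change": 6,
}

total = sum(outcomes.values())

# Share of all studies falling into each outcome category.
shares = {label: count / total for label, count in outcomes.items()}

for label, count in outcomes.items():
    print(f"{label}: {count}/{total} = {shares[label]:.2%}")
```

No single outcome accounts for even 40% of the studies. Keep in mind this is a simple vote count, not a meta-analysis: it treats every study as equal regardless of size or design.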

A 2023 narrative review in Frontiers in Aging attempted to synthesize the neurotrophic effects of IF, calorie restriction, and exercise, and similarly found the human BDNF evidence to be inconsistent and insufficient for strong conclusions (3).

Why the disconnect between animal and human data? Several possibilities:

- Animal studies typically measure BDNF in brain tissue directly; human studies rely on blood BDNF levels, which may not reflect what’s happening in the brain

- The fasting protocols studied in humans vary enormously (Ramadan fasting, alternate-day fasting, calorie restriction, time-restricted eating) — these may not all trigger the same neurological responses

- Measurement timing matters — when in the fasting/refeeding cycle you measure BDNF may produce different results

Honest assessment: The subjective mental clarity during extended fasts is real. Attributing it specifically to BDNF upregulation in humans is not supported by the current evidence. The more likely drivers are ketone metabolism (well-established) and the absence of postprandial blood sugar fluctuations (straightforward physiology).

Evidence strength: Strong in animals, weak and contradictory in humans. This is the weakest of the claimed fasting benefits in this series.

The Stable Fuel Supply

This is the simplest explanation and possibly the most underrated: when you’re not eating, your blood sugar isn’t spiking and crashing. The brain receives a steady supply of ketones instead of riding the glucose roller coaster. For anyone who normally experiences afternoon energy dips, post-meal drowsiness, or reactive hypoglycemia, the stable fuel supply of ketosis may account for much of the perceived clarity — not through some exotic neurotrophic mechanism, but simply by removing the disruptions.

Evidence strength: Physiologically obvious but rarely studied in isolation because it’s hard to disentangle from the other effects of fasting.

Sources

1. de Cabo & Mattson 2019, NEJM. Metabolic switching and brain ketone adaptation: https://pubmed.ncbi.nlm.nih.gov/31881139/

2. 2024 systematic review, Medicina. IF and BDNF in humans (contradictory results across 16 studies): https://pubmed.ncbi.nlm.nih.gov/38276070/ (free full text: https://www.mdpi.com/1648-9144/60/1/191)

3. 2023 narrative review, Frontiers in Aging. Neurotrophic effects of IF, calorie restriction, and exercise: https://www.frontiersin.org/journals/aging/articles/10.3389/fragi.2023.1161814/full